The INP, an acronym for “Interaction to Next Paint”, is a web performance metric introduced by Google in May 2022 to succeed another performance indicator, the FID. Available in beta since February 2023 via the “Experimental” flag, it makes its official entry into the Core Web Vitals this month, on March 12, 2024 to be precise.

In this dedicated article, we’ll explain everything you need to know about this webperf metric, including how to make sure you’re in the green, or at least as close to it as possible. This is a goal you absolutely must aim for, as the INP now impacts the Core Web Vitals, which are themselves a criterion in Google’s search engine results page ranking algorithm.

Evolving Google’s algorithm: a necessity

Before delving fully into the means of measuring and then optimizing the INP, it seems essential to return to the question of “why”. After all, Google and site publishers have three Core Web Vitals metrics at their disposal, which seemed to meet their objectives, didn’t they? So why introduce a new metric, almost five years after its launch, and shake up the habits of site publishers, web agencies and freelancers?

From relevance to quality measurement

Like many website publishers, web agencies and SEO experts, part of your day-to-day work probably involves keeping a close eye on updates to Google’s results ranking algorithm. Historically, these updates were primarily aimed at ensuring the relevance of content via a growing number of complementary mechanisms:

- identification of low-quality text (spam in particular), duplicate content and, more recently, AI-generated content;

- improved authority scoring through detection of “unnatural” netlinking techniques: spam, link buying, satellite site networks, etc.

Since 2020, Google has supplemented its “Core Updates” and “Spam Updates” with a new type of update designed to assess the quality of pages and websites. With these, the firm aims to assess the level of user satisfaction, and not just the intrinsic relevance of content and its popularity. This UX-oriented approach is, for the company, the best strategy for maintaining its leadership in online search.

Evaluating and, a fortiori, measuring User Experience is no simple matter: it’s a vast field which, in the web world, involves professions as varied as web design, security, accessibility and front-end development. With this in mind, Google has deployed its “Page Experience” component, which is based in part on the “Core Web Vitals”. And that’s what we’re going to talk about now.

Core Web Vitals for measuring the real User Experience

“Core Web Vitals” are a set of metrics devised by Google engineers to mathematically measure the quality of the User Experience. To collect figures for a component which, from the user’s point of view, largely involves the subconscious and emotions, they had to define the basics of what web performance actually is. This approach enabled them to identify three key components, each materialized by a metric.

Perceived speed: LCP

LCP, an acronym for “Largest Contentful Paint”, calculates the loading speed perceived by users. While the Speed Index would have been more appropriate for this exercise, Google had to come to terms with the reality on the ground, opting for an indicator that was less costly to calculate. That’s why the LCP calculates the time it takes to display the main element visible in the viewport when a page first loads.

In fact, the correlation between Speed Index and LCP is significant, making LCP a highly relevant metric. If we had to keep only one, LCP would undoubtedly be the one to keep.

Visual stability: CLS

CLS, an acronym for “Cumulative Layout Shift”, aims to calculate the consistency of a web interface over time. There’s nothing more disturbing for a user than browsing a page in which the elements move around anarchically. The aim is to ensure that interactions with a page are the result of the user’s will and not of poor manipulation. It’s an excellent way of measuring potential frustration.

Once again, the indicator plays its role perfectly, shedding precise light on the technical problems of pages.

Interface responsiveness: from FID to INP

It’s in this third section that we get to the heart of the matter. Since the introduction of Core Web Vitals in 2019, page responsiveness has been calculated via FID, an acronym for “First Input Delay”. A particularly self-explanatory indicator: it measures precisely what it says it does.

However, FID was hardly representative of real user experience in terms of responsiveness, for two main reasons:

- it only measured the first interaction, not subsequent ones. Users spend around 90% of their time on a page after it has loaded, so the first interaction represented only a tiny fraction of what they actually experience;

- it only measured latency prior to JavaScript execution, not the full latency as perceived by the user. The delta between the two could therefore be significant, with FID being largely underestimated most of the time: the first 100 ms threshold was rarely reached, making the metric less interesting.

To correct these shortcomings, Google introduced the INP, an acronym for “Interaction to Next Paint”. With this new metric, we place ourselves from the user’s point of view: what we’re looking to achieve is a visible result on the page, and no longer the execution of a meaningless JavaScript callback function. And this throughout browsing sessions, not just when pages load.

When a user leaves a web page, the maximum value is taken into account and becomes the INP from the Core Web Vitals point of view. This approach makes sense insofar as it aims to ensure that performance levels are consistent over time.

How is INP measured?

As with the other Core Web Vitals, the engineers have established a precise methodology for calculating a page’s INP. The aim is to ensure that whatever tool is used, the results are consistent.

How is INP calculated?

Unlike its predecessor FID, INP can currently only be obtained through Chromium-based web browsers, i.e. Google Chrome, Microsoft Edge, Opera and the Samsung mobile browser. Neither Firefox nor Safari currently exposes such an API. Let’s take a look at how the metric is actually calculated.

Three steps instead of one

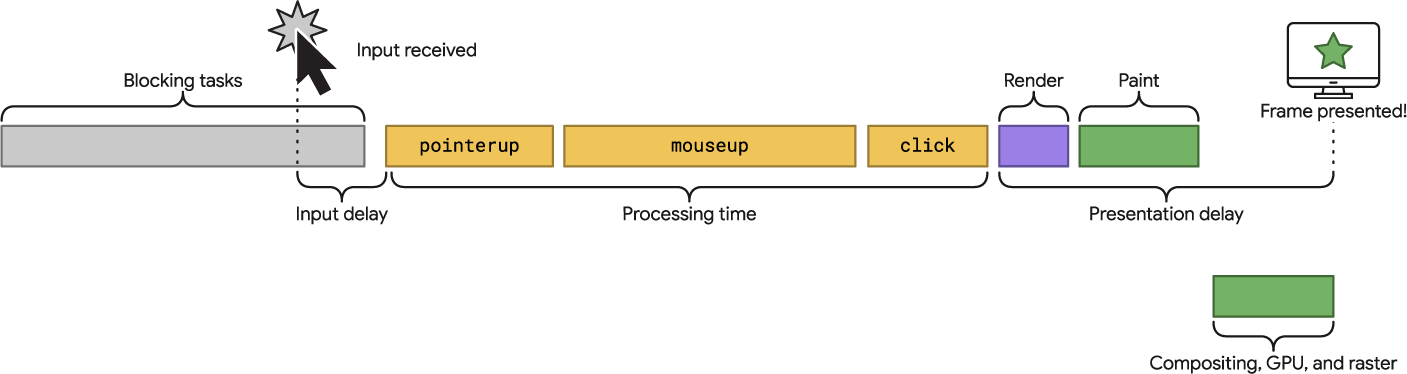

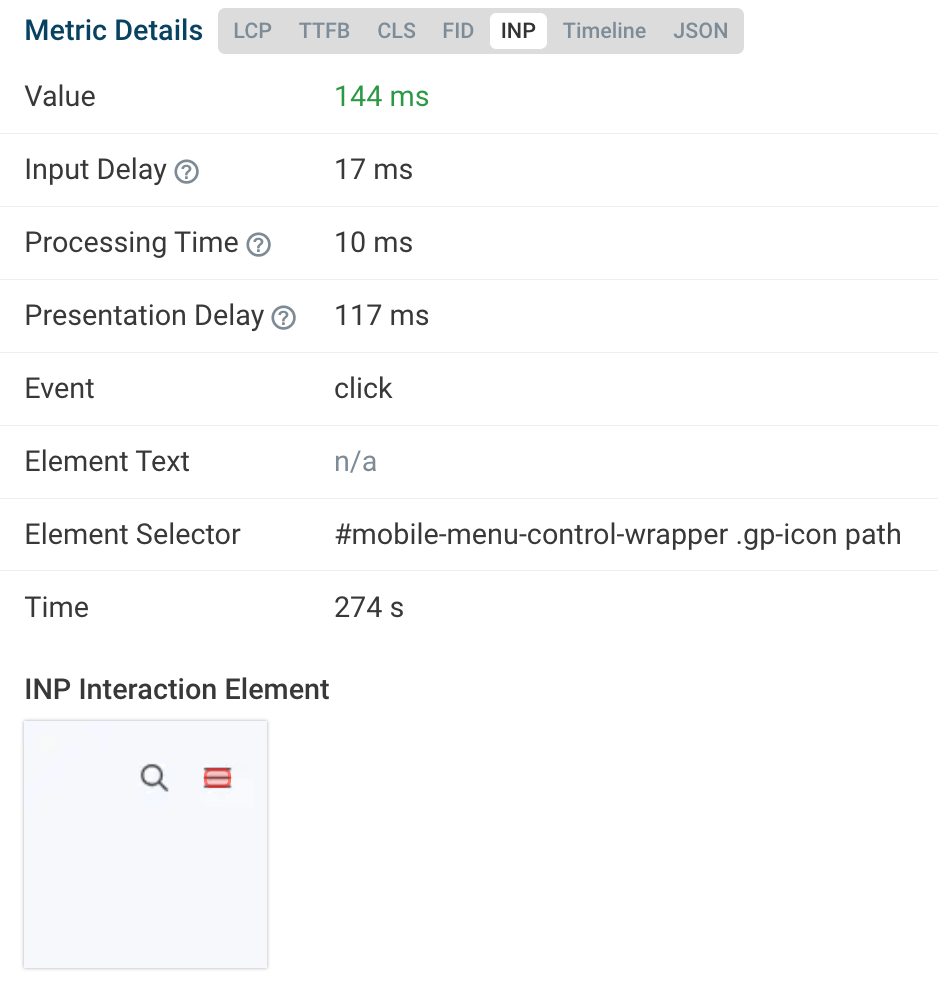

As we saw earlier, INP goes further than FID in terms of measurement. During an interaction such as clicking, touching a touchscreen or keyboard input (but not scrolling or hovering), the metric is calculated by adding up the duration of three main stages:

- input delay, which includes FID and calculates the latency between user input and the triggering of the corresponding event;

- processing time of the interaction itself via JavaScript, CSS or native browser functions;

- displaying the updated page on screen, which involves a rendering and painting phase (described in greater detail later).

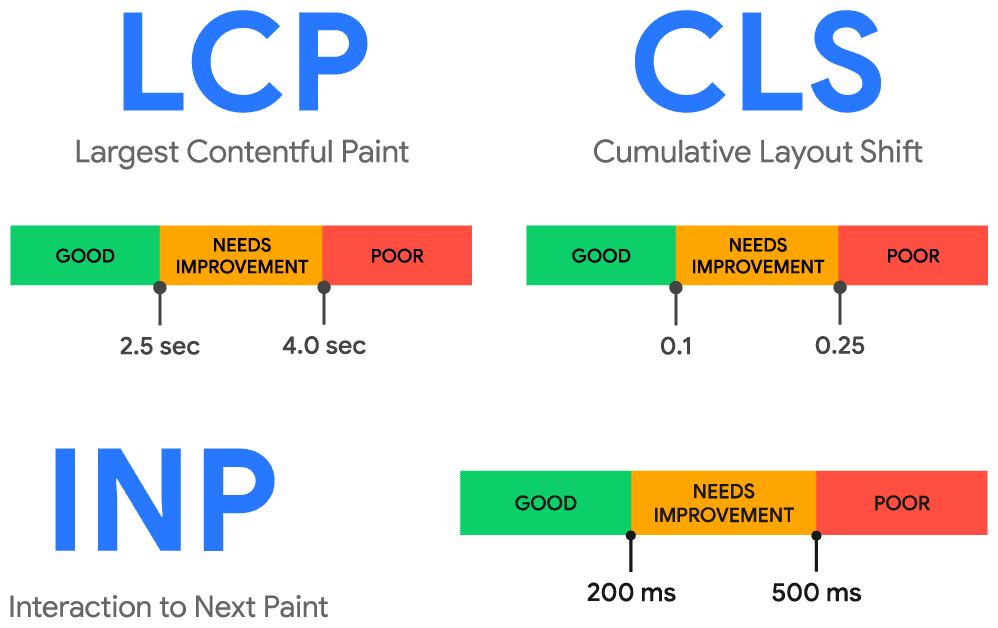

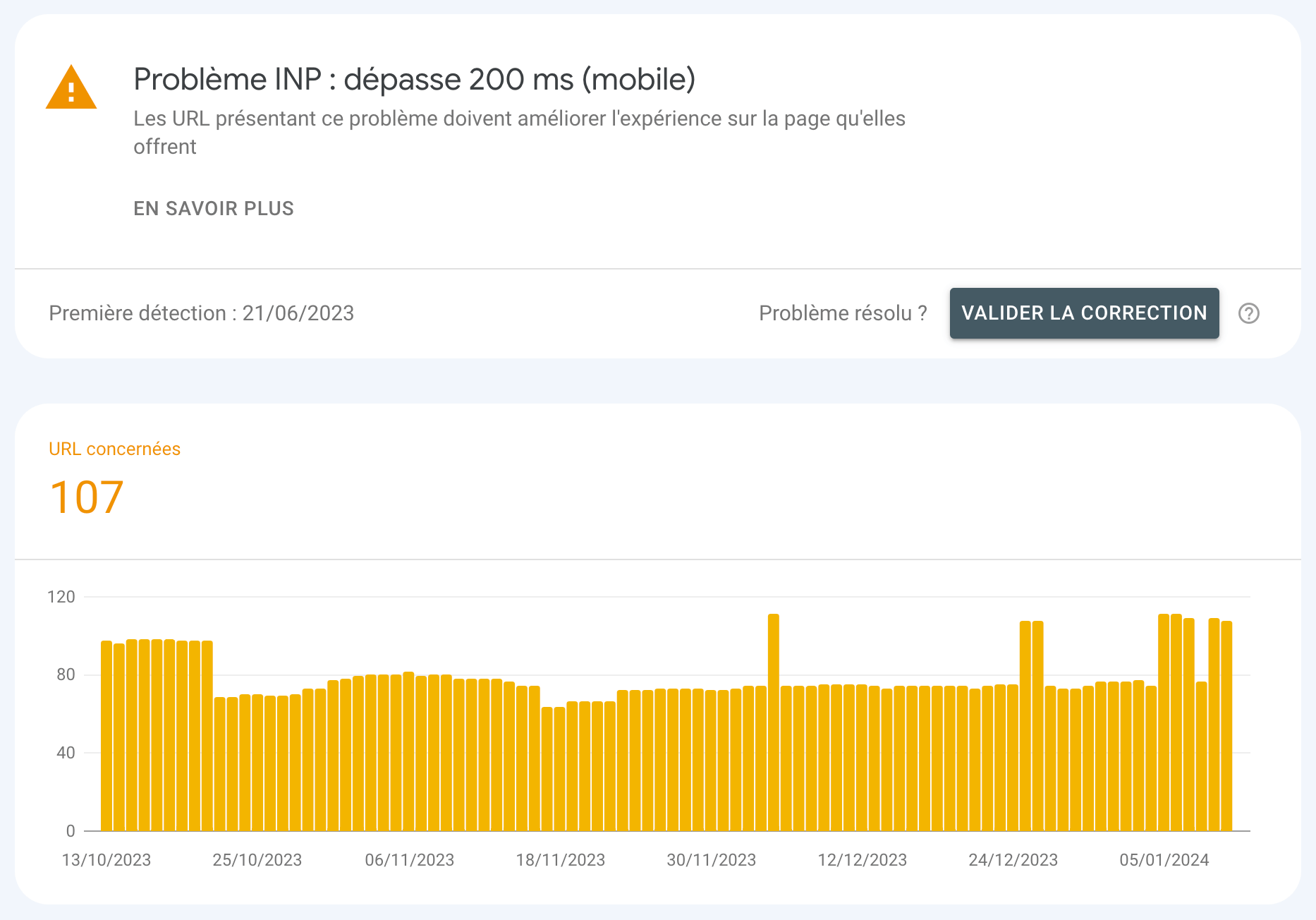

As this data is temporal, the unit of measurement for INP is the millisecond. To offer a quality user experience in terms of responsiveness, Google engineers have estimated that it is necessary to be under 200 ms. The threshold is thus more generous than for FID, which makes sense since more elements are measured. Conversely, exceeding the 500 ms threshold (half a second) causes the indicator to fail.
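As a rough illustration of the calculation described above, the sketch below sums the three stages of a single interaction and rates the result against the 200 ms and 500 ms thresholds. The function names and sample values are hypothetical and only serve this example; the “needs improvement” label for the intermediate zone mirrors the wording used in Google’s reports.

```javascript
// Hypothetical helpers: names and sample values are illustrative only.

// Sum the three stages of a single interaction (all values in milliseconds).
function interactionLatency({ inputDelay, processingTime, presentationDelay }) {
  return inputDelay + processingTime + presentationDelay;
}

// Rate a latency against the 200 ms and 500 ms thresholds.
function rateInp(ms) {
  if (ms <= 200) return 'good';
  if (ms <= 500) return 'needs improvement';
  return 'poor';
}

const latency = interactionLatency({ inputDelay: 40, processingTime: 130, presentationDelay: 60 });
console.log(latency, rateInp(latency)); // 230 needs improvement
```

In this made-up case, no single stage is dramatic on its own, yet their sum is enough to leave the green zone.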

High variability

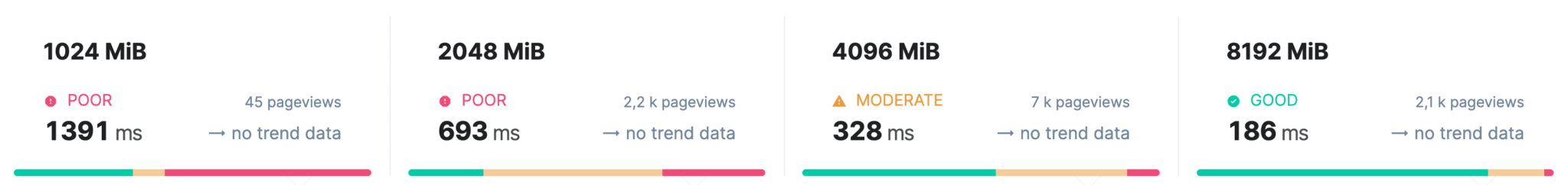

We explained that INP measures a sum of latencies and processing times, without detailing why this might take time. The answer is right in front of you: it’s your hardware, and more specifically the computing power of your microprocessor and the amount of RAM it has at its disposal. JavaScript execution and visual rendering are two extremely costly operations for a CPU, which carries out the majority of processing in a single thread.

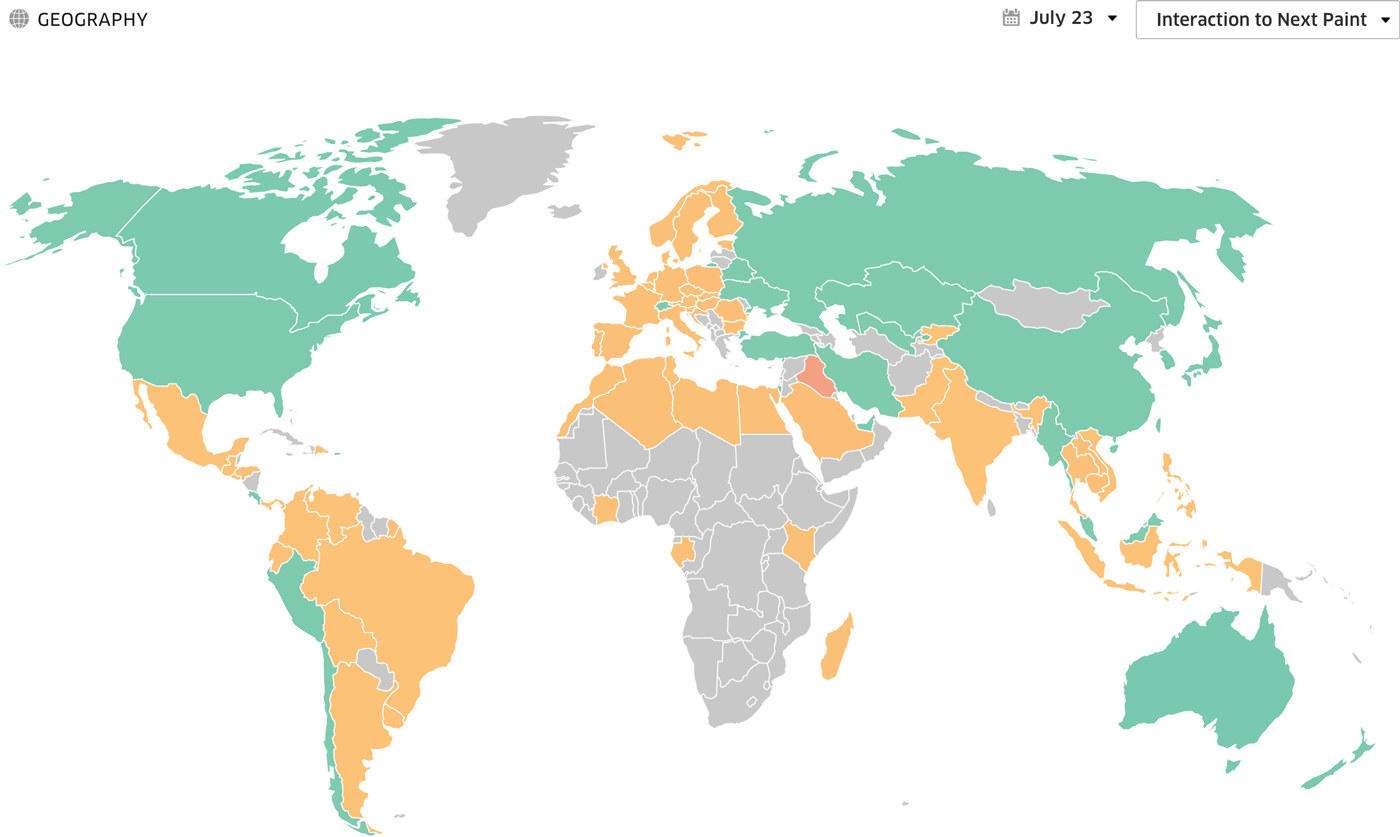

As a result, the latter quickly and regularly reaches saturation point, generating the notorious “Long Tasks” (more than 50 ms execution time). In concrete terms, this translates into great variability in the metrics collected from users: a desktop visitor will not have the same level of performance as a mobile user. And even among smartphone users, the operating system, system settings and browser are all factors of variability.

As Google’s objective is to provide metrics that are understandable and actionable by site editors, the data is systematically broken down into mobile users on the one hand, and desktop users on the other. This is a logical grouping, given the differences in experience between these two families of terminals: screen size, pixel density, input mode, attention level… We are indeed dealing with two totally different experiences.

75th percentile over 28 days

In view of the variety of experiences, it is impossible for most sites to aim for 100% performance, for the INP as for other metrics. With this in mind, Google has opted for an approach based on thresholds at the 75th percentile. This means that to validate the INP, at least 75% of your users must have a good INP (i.e. under 200 ms).
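To make the 75th percentile more tangible, here is a minimal sketch that computes it over a handful of made-up INP values. It assumes the simple “nearest-rank” method, which is not necessarily the exact calculation used in Google’s reports; the function name and the sample data are hypothetical.

```javascript
// Nearest-rank percentile: an assumption for this sketch, not necessarily
// the exact method used in Google's reports.
function percentile(values, p) {
  if (values.length === 0) return undefined;
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

// Hypothetical INP values (ms), one per page view.
const samples = [120, 80, 450, 160, 210, 95, 180, 140];
const p75 = percentile(samples, 75);
console.log(p75, p75 <= 200 ? 'passes' : 'fails'); // 180 passes
```

Six of the eight page views (75%) are at or below 180 ms, so this sample passes even though two visits, including one at 450 ms, had a worse experience.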

The consensus is that this approach guarantees good representativeness (unlike the 50% threshold used previously), while not being as demanding as the 90th percentile threshold sometimes evoked by Google teams. Nothing motivates like a reachable goal.

When analyzing the data for a URL or origin in a report provided by Google, the 75th percentile is materialized by small black pins. Each one is topped by a value corresponding to the consolidated average for all users.

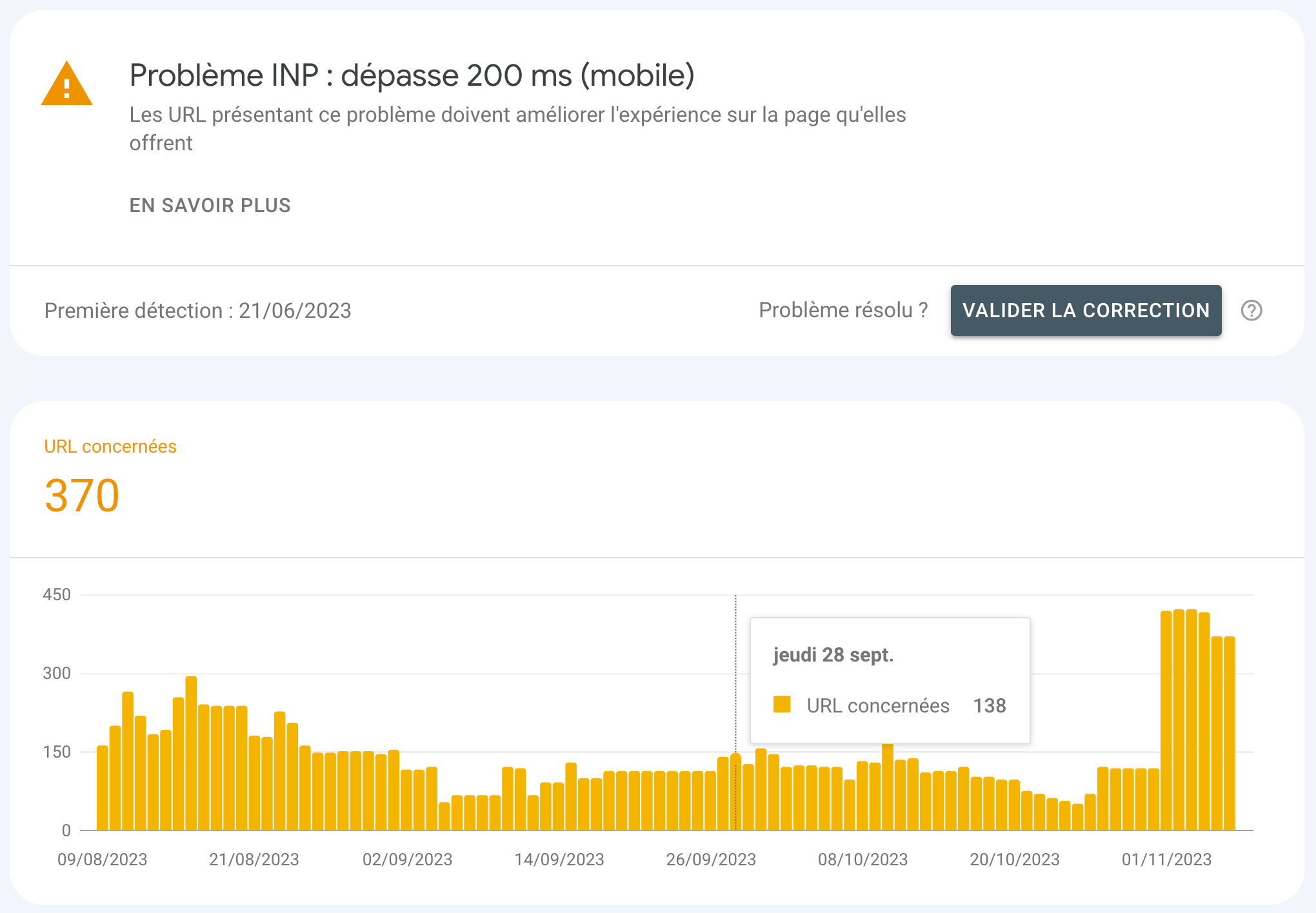

Finally, it should be noted that, for reasons of calculation costs, the data shared by Google is smoothed over a rolling 28-day period. This form of inertia makes it difficult to use data from official Google tools to monitor performance. On the other hand, it avoids the “roller-coaster” effect that would lead to massive alerts being sent out at the slightest update or cache flush.

Which tools should be used to measure INP?

As we’ve already seen, Google collects INP via its Core Web Vitals browser API. But is it the only one to do so? And how can such data be used to steer a web project and make the right decisions? Let’s take a look at some of the tools available to answer these questions.

The Chrome User Experience Report

We’ve already covered the ecosystem of tools developed by Google, without actually introducing it. This shortcoming is now rectified with a proper presentation of the “Chrome User Experience Report”, or “CrUX” for short. The tool is a gigantic database containing performance data collected from users via the famous API integrated into Chromium-based browsers (Google Chrome, Microsoft Edge).

CrUX data is the same as that used by Google for all its reports: Core Web Vitals in Search Console, the “Discover your users’ experience” section in PageSpeed Insights reports… As this data is freely accessible, it can also be accessed via APIs and BigQuery, enabling you to go far beyond what Google offers in terms of formatting and freshness.

If you want to go beyond the meagre interface offered by Google in its Search Console or access recent data, we recommend using a third-party tool such as Treo or Speetals. The latter are able to retrieve data from CrUX and format it in a more intelligent way, so you can benefit from truly operational dashboards. In particular, they enable users to be filtered by geographic origin, or to view 3 years of history.
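For those who prefer to query CrUX directly, the sketch below prepares a request for an origin’s INP and reads the 75th percentile from the response. The endpoint, the interaction_to_next_paint metric name and the response shape reflect the public CrUX API as we understand it, so treat them as assumptions to check against the official documentation; the helper names and the API key are placeholders.

```javascript
// Sketch only: endpoint and field names are assumptions based on the public
// CrUX API; verify them against the official documentation before use.
const CRUX_ENDPOINT = 'https://chromeuxreport.googleapis.com/v1/records:queryRecord';

// Build the request for one origin and one form factor ('PHONE' or 'DESKTOP').
function buildCruxRequest(origin, formFactor, apiKey) {
  return {
    url: `${CRUX_ENDPOINT}?key=${encodeURIComponent(apiKey)}`,
    init: {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify({ origin, formFactor, metrics: ['interaction_to_next_paint'] }),
    },
  };
}

// Read the 75th percentile from a response, or null when INP is missing.
function extractInpP75(response) {
  const p75 = response?.record?.metrics?.interaction_to_next_paint?.percentiles?.p75;
  return p75 === undefined ? null : Number(p75);
}

// Usage (requires a real API key; 'YOUR_API_KEY' is a placeholder):
// const { url, init } = buildCruxRequest('https://example.com', 'PHONE', 'YOUR_API_KEY');
// const data = await (await fetch(url, init)).json();
// console.log(extractInpP75(data));
```

Separating the request builder from the response reader keeps the mobile and desktop calls symmetrical, in line with the way the data is broken down.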

Field data

The great strength of CrUX is its fully integrated and automated operation: data is collected no matter what, with no need for site editors to request it. But just because this data exists unconditionally and free of charge doesn’t mean it has to be at the heart of your optimization or webperf monitoring strategy.

In fact, it’s possible to collect this same data via a JavaScript tag, which removes the many constraints inherent in CrUX. The sample is notably extended, since a growing number of metrics are available in browsers such as Safari and Firefox (in particular the LCP since version 121). Depending on the configuration adopted, “Real User Monitoring” (“RUM”) tools can also be used to dig deeper into the metrics collected:

- highlighting single visits vs. multi-page visits, where the browser cache significantly improves performance;

- highlighting of page views taking advantage of the “Back Forward Cache” mechanism, which guarantees very low metrics;

- highlighting of pages with a hidden visibility state: when opened in a background tab, these pages generally show significantly reduced performance;

- create groups of pages relevant to a given activity. Example: category pages, product pages and SEA landing pages for an e-commerce site.

On the INP side, RUM-type tools can also be used to retrieve interaction data to identify the components responsible for interaction latency. This contextualization brings considerable added value, because unlike LCP and CLS, INP is only available within field data, since it requires page navigation.

When it comes to integrating such functionality into your technical stack, you have plenty to choose from. The various tools on the market are all based on the native Performance API, which enables user data to be retrieved via the JavaScript method performance.getEntries() or via an instance of PerformanceObserver. In absolute terms, it is even possible to develop your own tool for collecting, processing and displaying this data.
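For the curious, here is a minimal sketch of what such a home-made collector could look like with a PerformanceObserver listening to Event Timing entries. The helper names are hypothetical, the feature check assumes a browser that exposes the “event” entry type, and a production tool would also need to aggregate interactions and send them to a server.

```javascript
// Split one Event Timing entry into the three stages of an interaction.
// startTime, processingStart, processingEnd and duration are standard
// PerformanceEventTiming fields; the helper name is hypothetical.
function breakdown(entry) {
  return {
    inputDelay: entry.processingStart - entry.startTime,
    processingTime: entry.processingEnd - entry.processingStart,
    presentationDelay: entry.startTime + entry.duration - entry.processingEnd,
  };
}

// Start observing interactions; returns null where Event Timing is unavailable.
function observeInteractions(onInteraction) {
  const supported =
    typeof PerformanceObserver !== 'undefined' &&
    (PerformanceObserver.supportedEntryTypes || []).includes('event');
  if (!supported) return null;

  const observer = new PerformanceObserver((list) => {
    for (const entry of list.getEntries()) {
      // Entries without an interactionId (e.g. mouse moves) are not interactions.
      if (entry.interactionId) onInteraction(entry.name, entry.duration, breakdown(entry));
    }
  });
  // durationThreshold lowers the default cut-off below which events are not reported.
  observer.observe({ type: 'event', buffered: true, durationThreshold: 16 });
  return observer;
}

observeInteractions((name, duration, stages) => console.log(name, duration, stages));
```

Logging the three stages next to the event name is often enough to tell whether a slow interaction comes from a busy main thread, a heavy callback or a costly rendering phase.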

But the most accessible solution is to turn to a SaaS solution such as RUMvision, DebugBear, Dynatrace, New Relic, Raygun or Contentsquare. Bear in mind, however, that the latter require the deployment of front-end JavaScript… and can therefore generate Blocking Time and contribute to an increase in your INP. So it’s up to you to decide whether it’s worth the risk.

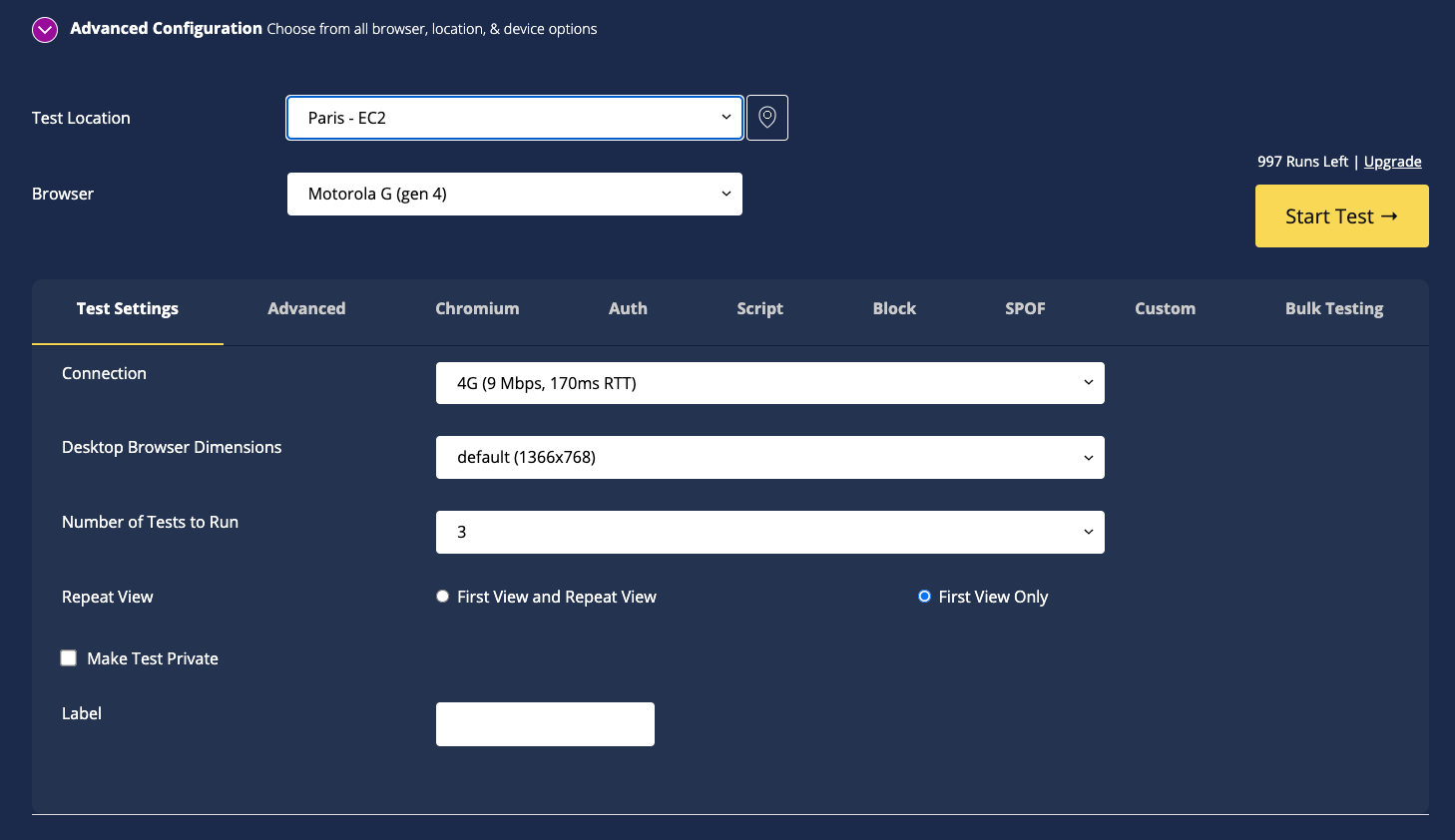

Synthetic data

Synthetic data is collected by a family of tools that perform tests under “lab” conditions. The idea is that they reproduce a user visit with a well-defined context: network bandwidth and latency, CPU power, screen dimensions and pixel density, user agent… Their main interest is comparison, either with competing pages, or over time. In particular, they are ideal tools for monitoring performance and quickly identifying any performance regressions.

As mentioned above, however, INP is not available in reports of this type, and for good reason: these are robots that do not interact with pages as real users do. The solution is to turn to the “Total Blocking Time” (“TBT”) metric, which is highly correlated with INP. Both are highly dependent on the volume of JavaScript executed.

There are many players offering tools of this type, the most popular of which are WebPageTest, GTmetrix, Treo and Contentsquare Speed Analysis. For a comprehensive view of performance, it is advisable to monitor both field data and synthetic data.

Development Tools for your browser

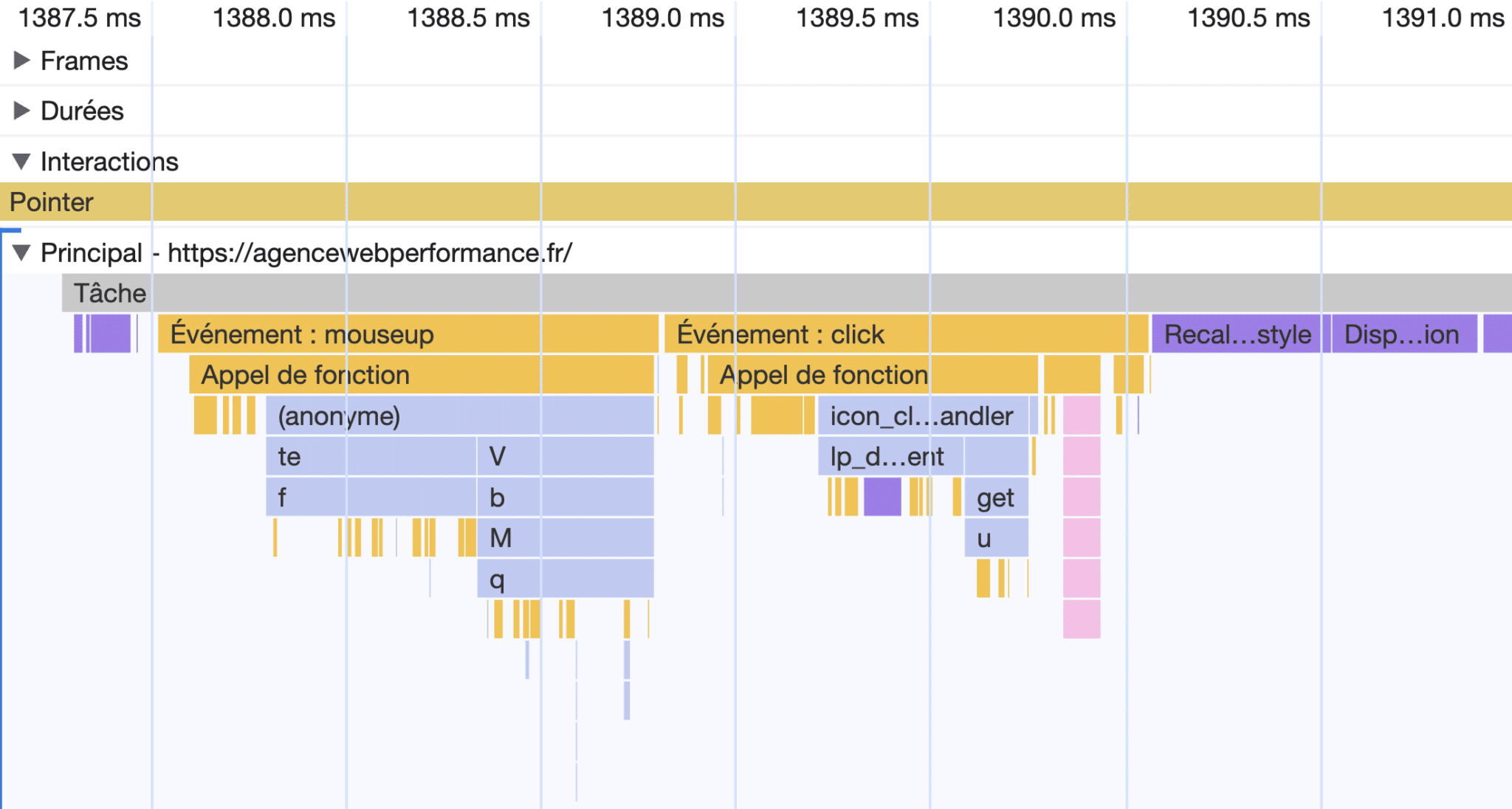

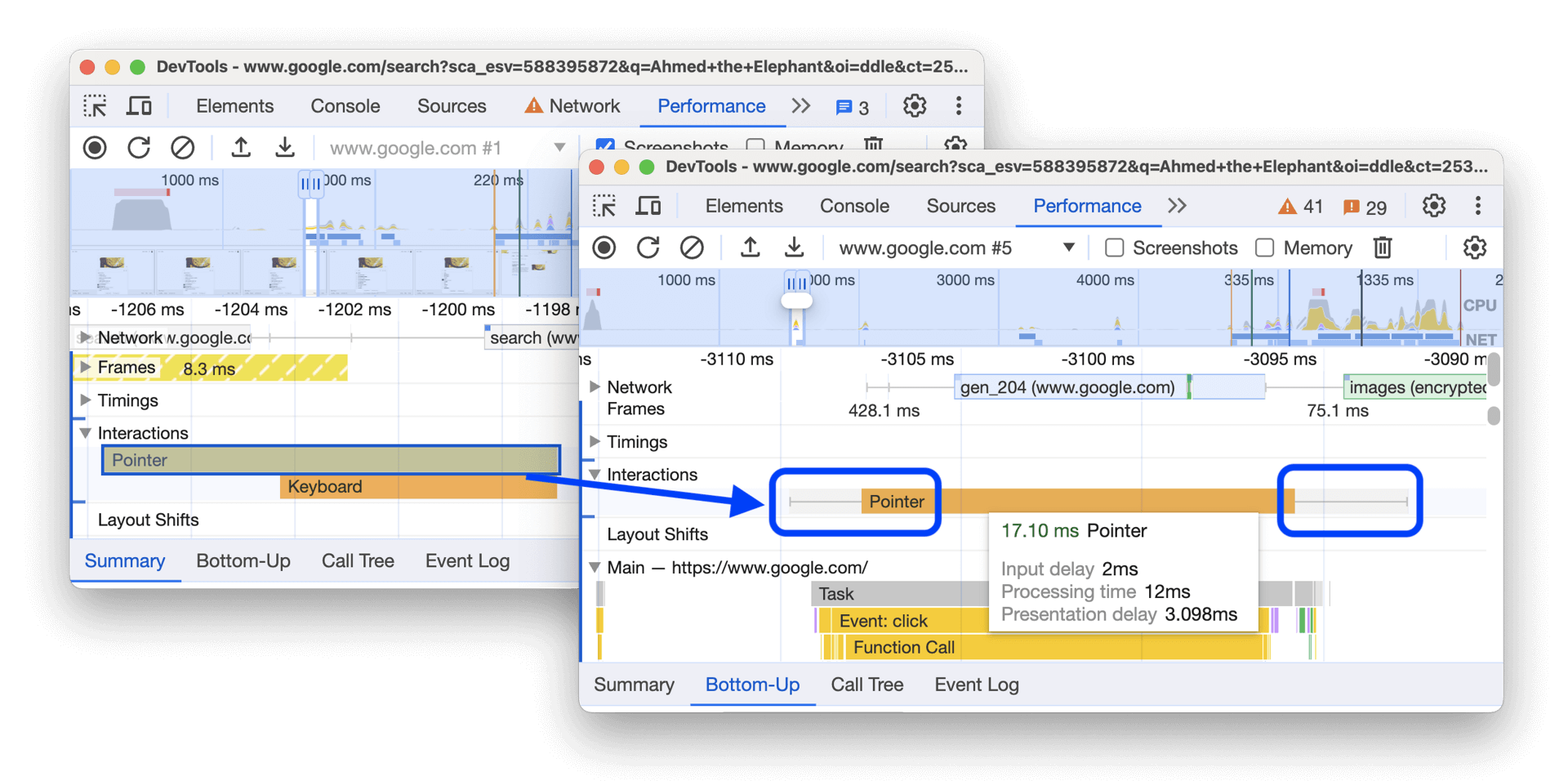

Because you are, after all, a web surfer like everyone else, there’s nothing to stop you using your own web browser to test your site’s performance and identify any weaknesses on the INP side. Google Chrome’s development tools, also known as “DevTools”, feature an extremely sophisticated tool designed specifically for this purpose: the “Performance” tab, accessible via the menu and then “More tools”.

Using it, and in particular reading its timeline and “flame charts” (so called because of their visual appearance), you can identify the JavaScript code triggered after each type of interaction, as well as the repaint and reflow operations executed by the browser in its wake. Ideally, the main task should be as short as possible and the number of elements triggered as low as possible.

For technical profiles, this is the ideal place to identify THE JavaScript function that penalizes your INP. Note Google’s recent efforts to make the tool more comprehensible (cf. the changes in Chrome 121).

We won’t go into detail here, as understanding this tool requires a solid grounding in front-end web development. Fortunately, the official documentation is thorough and, for the more motivated, there are even more advanced solutions for detailed profiling of the “traces” generated by browsers. These can be exported in JSON format for viewing using tools such as Perfetto UI.

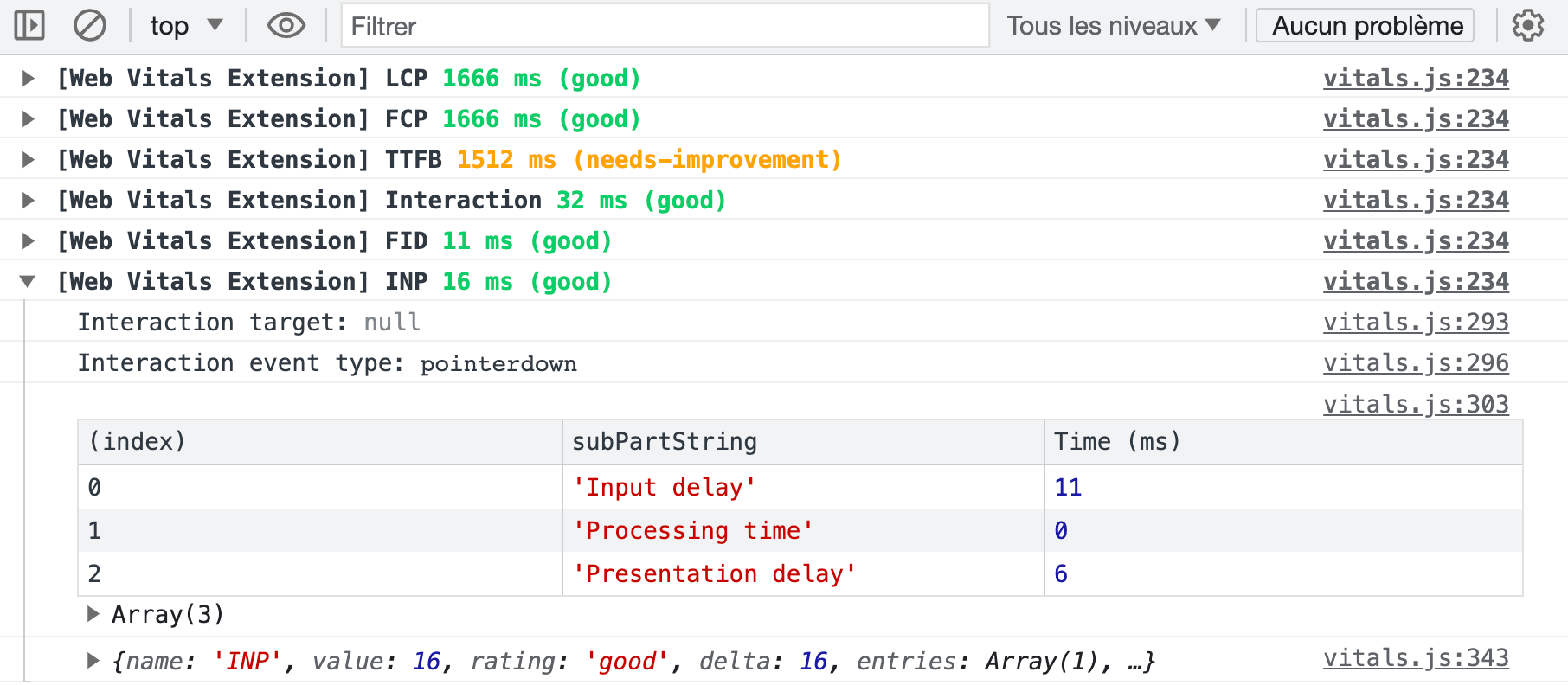

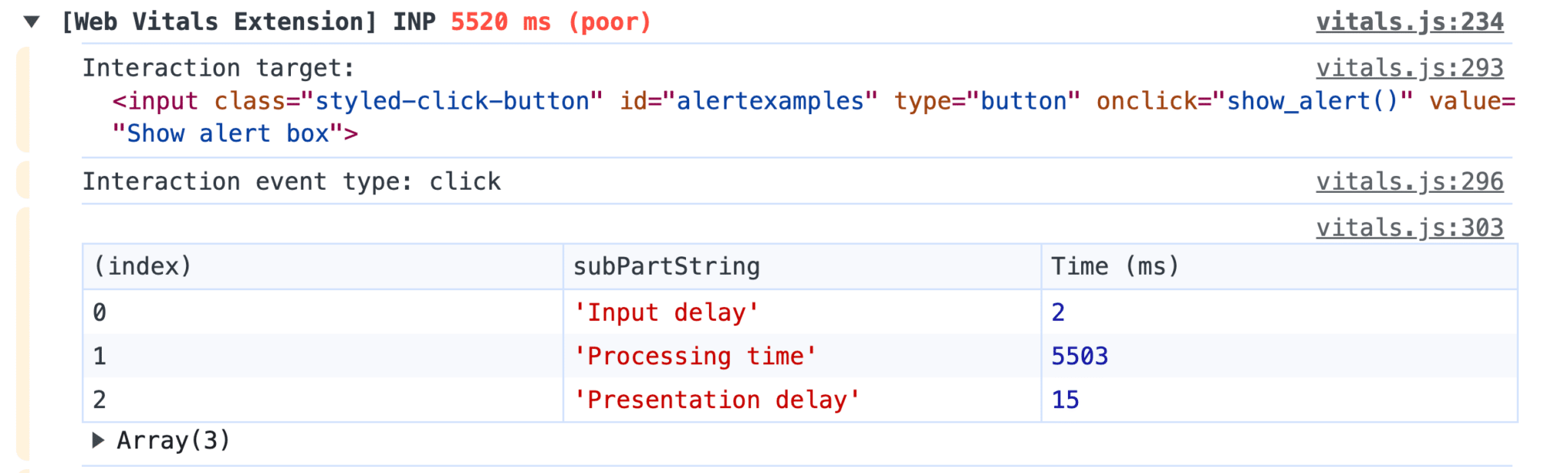

The official Web Vitals browser extension

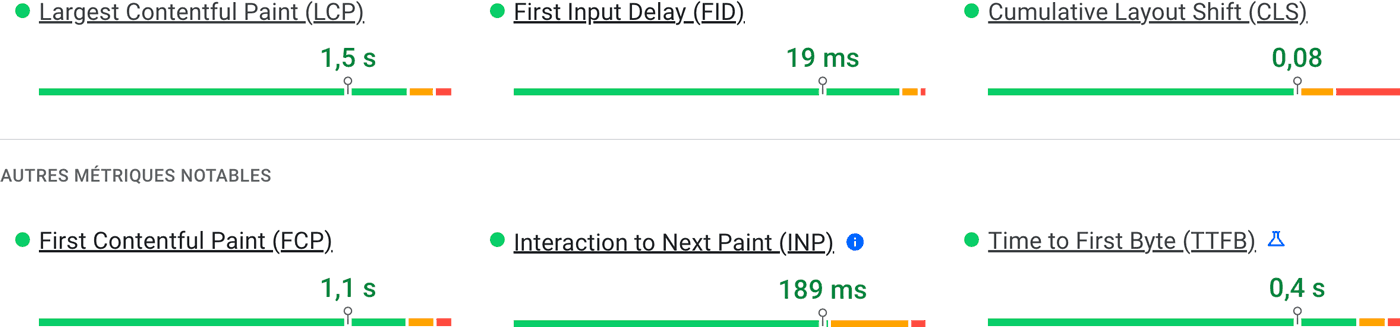

For less technical users, there’s a “Web Vitals” extension for Google Chrome. After enabling metrics logging in the console via its options, it displays all data collected by Google Chrome’s integrated Web Vitals API in real time. Each interaction with a page logs an Interaction event, with a breakdown of the three stages that make it up in a table.

In the example above, we can see that the 16 ms of INP are linked to an input delay (11 ms) as well as to the rendering delay (6 ms). The 16 ms threshold is a textbook case, since on a conventional computer monitor with a refresh rate of 60 Hz, a new image is generated every 16.66 ms (1000 ms / 60). As the browser is unable to generate an image before the next refresh cycle, the INP is in fact rarely lower than 16 milliseconds.
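The arithmetic behind this floor fits in one line. The sketch below assumes that the display’s refresh rate is the only constraint, and the function name is purely illustrative.

```javascript
// Time between two screen refreshes, in milliseconds.
function frameInterval(refreshRateHz) {
  return 1000 / refreshRateHz;
}

console.log(frameInterval(60).toFixed(2));  // 16.67
console.log(frameInterval(120).toFixed(2)); // 8.33
```

On a 120 Hz screen the floor would therefore drop to roughly 8 ms, which is one more reason why two users never see exactly the same INP.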

We are now able to access our users’ performance data, which will enable us to move forward in trying to understand why some users experience high INP.

What impacts INP?

JavaScript execution

As previously mentioned, INP is a metric that is highly sensitive to the volume of JavaScript present on a page. The metric is directly correlated with the number and, above all, the weight of the .js files called: not only does a heavy script consume bandwidth when downloaded, it also consumes significant CPU and RAM resources to execute (remember the correlation between the INP metric and the amount of RAM available).

This is why INP is also strongly correlated with the following metrics:

- Long Tasks: tasks that saturate CPU resources for more than 50 milliseconds.

- Blocking Time: the script execution window that effectively penalizes interactivity. If a long task lasts 87 milliseconds, the Blocking Time will be 37 ms (87 – 50 ms).

- Long Animation Frames (LoAF): this is a new API designed to eventually replace Long Tasks, rather like INP with FID. It offers an even higher level of understanding of the reactivity issues that can impact animations.
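The Blocking Time calculation described in the list above can be sketched as follows. The function names and the list of task durations are hypothetical; only the 50 ms threshold and the 87 ms example come from the definitions above.

```javascript
const LONG_TASK_THRESHOLD_MS = 50;

// Blocking portion of one task: whatever exceeds the 50 ms threshold.
function blockingTime(taskDurationMs) {
  return Math.max(0, taskDurationMs - LONG_TASK_THRESHOLD_MS);
}

// Sum of the blocking portions of a list of task durations.
function totalBlockingTime(taskDurationsMs) {
  return taskDurationsMs.reduce((total, duration) => total + blockingTime(duration), 0);
}

console.log(blockingTime(87));                 // 37
console.log(totalBlockingTime([87, 30, 120])); // 107
```

Note that the 30 ms task contributes nothing: only the part of a task beyond 50 ms counts as blocking.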

Third-party JavaScript, which generally weighs several tens of kilobytes, has a particular impact on these metrics. If we had to classify the most impactful families, in an inevitably somewhat arbitrary way, it could look like this:

- A/B Tests, which by their very nature modify page content early in the rendering phase.

- Ad tags such as Google Ads, Taboola and ad network scripts that perform real-time attribution, or “real time bidding”, to increase revenues.

- Behavioral analysis tools such as Heatmap, Hotjar and Mouseflow.

- Chatbots, especially conversational tools based on AI, often developed with questionable technical mastery.

- Embedded videos, especially YouTube, but also Vimeo.

- Social inserts which, in addition to relying on JavaScript tags, load iframes calling up multiple scripts and resources.

Since these tools are often used in conjunction with one another, and may be called up via an equally costly Tag Manager, they drastically increase the INP, penalizing the User Experience and reducing your efforts to pass Core Web Vitals. But it’s not just third-party scripts that are to blame. A handful of other tool families regularly integrated into themes or technical stacks can also take their toll on INP:

- Browser functionality detection libraries like Modernizr;

- Date manipulation tools such as Moment.js;

- Some poorly developed polyfills;

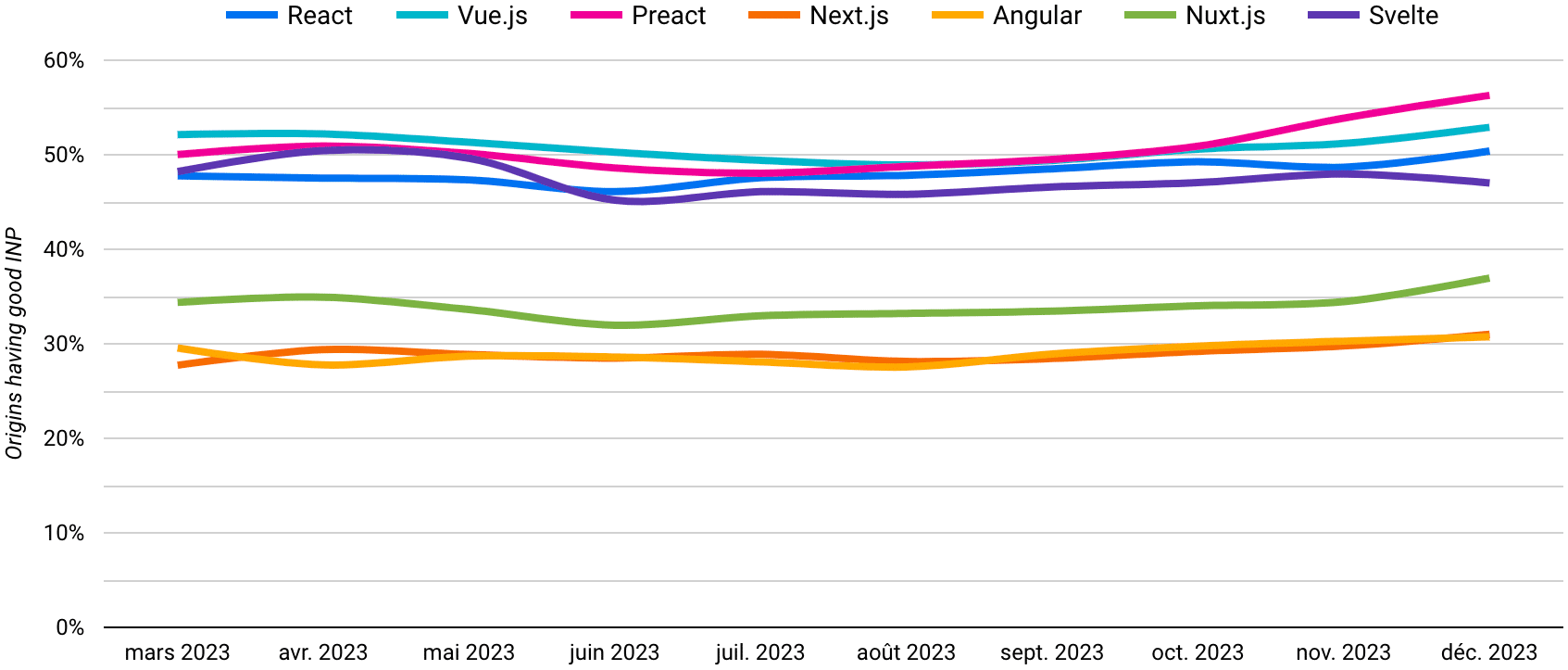

- … And all the JavaScript frameworks, from the traditional jQuery to the more modern React and Vue.

Finally, it should be noted that certain functionalities based on asynchronous requests (Ajax) can easily exceed the critical 500 ms threshold. This is because between a user’s click on a vote button (for example) and the on-screen display of the number of votes updated, the browser has made an exchange with the server, which has itself made a database query… And this rarely takes less than half a second.

Browser rendering

INP measures display latency following user interaction. As we saw earlier, this represents around 16 ms when all goes well, most of which is related to the rendering phase. Each of these stages consumes CPU resources:

- Style: the process of identifying which CSS selectors correspond to which parts of the DOM (e.g. .body-footer targets the site’s footer…).

- Layout: set of geometric calculations used to position elements on a 2-dimensional plane, both absolutely within the body of the page, and in relation to each other.

- Paint: the process of filling in elements on the screen: text, colors, images, borders, shadows… At this point, the browser also groups elements by “layers”.

- Compositing: display of different “layers” according to their visual depth in relation to each other. This applies in particular to elements positioned in absolute z-indexes.

In some cases, however, this rendering phase can take longer, without JavaScript even being involved in the process. This situation, which is much more common than you’d like, is linked to site design itself, and in particular to the following aspects:

- HTML code of pages: a complex DOM structure with numerous nodes and multiple successive nestings increases the consumption of CPU resources, and therefore the time required for page layout calculations;

- Non-optimal CSS: poorly implemented styles can generate multiple repaints and reflows on interaction. The invalidation and recalculation processes implemented by the browser then block rendering and cause the INP to explode.

This problem of non-optimal rendering is not only penalizing during the initial rendering phase, but also afterwards. When a user interacts with a page and an element is modified within it, the browser performs costly calculations to estimate whether other elements on the page should also evolve. In this way, it traces the DOM tree back to the root element, i.e. the <body> tag.

How can INP be improved?

Based on these observations, we know that we need to work on optimizing JavaScript and rendering. But what does this mean in concrete terms? Let’s take a look at some unfortunately common examples.

Optimizing JavaScript code

Reducing the volume of JavaScript

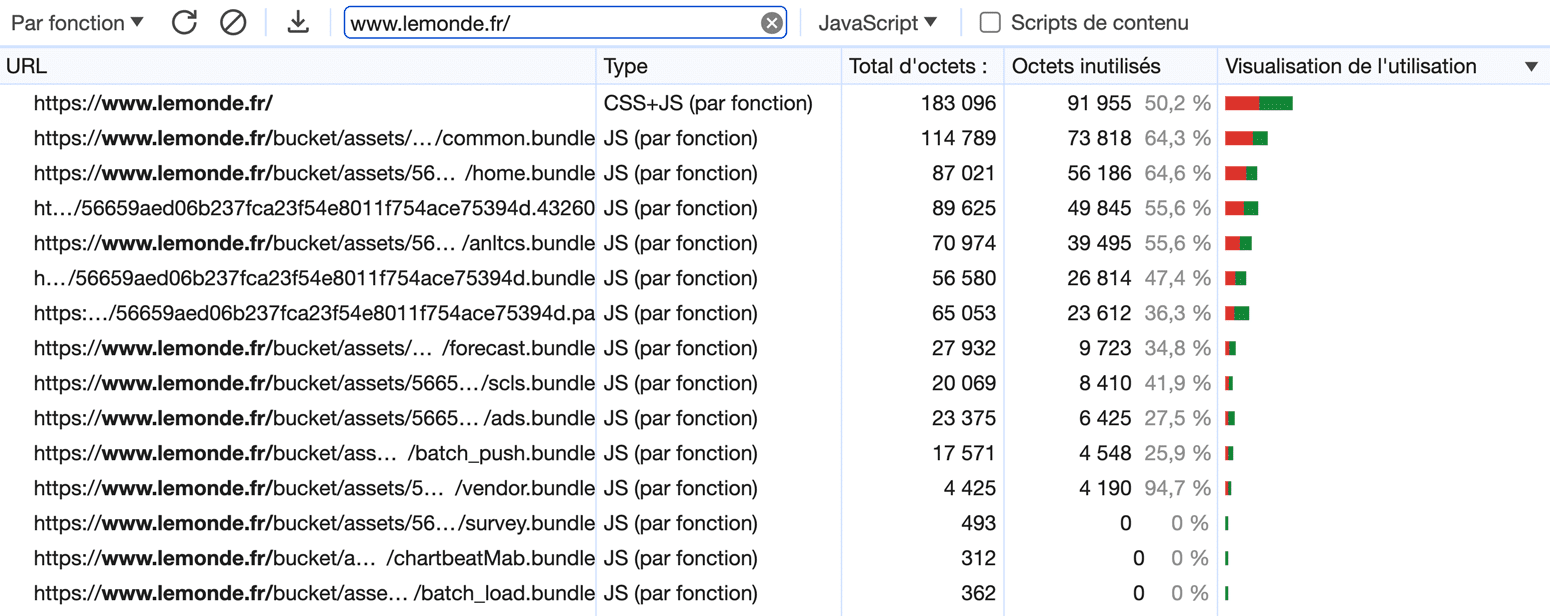

The first step, as logical as it is effective, is to reduce the volume of JavaScript called up in pages. I’m not the one who invented it: it’s an official recommendation of the webperf gurus working in Google’s teams. It’s all about using common sense and deleting anything that isn’t strictly useful, at the risk of sometimes having to arbitrate between what’s necessary and what’s “nice to have”.

Each kilobyte of JavaScript saved potentially translates into an improvement in INP with, as always, an advantage on the Core Web Vitals side, but also on the User Experience side. The tool of choice for initiating this identification mission is the “Coverage” tab of the development tools (capture above). It allows you to detect the percentage of use of each JavaScript file, both first-party and third-party.

This approach can prove complex for developments based on dependency managers such as Composer and npm, which tend to make the volume of JavaScript explode in a rather opaque way. In all cases, the logic is to use the most specific tools possible, or even custom developments, to ensure that the weight of the bundles is as low as possible.

Downloading and executing scripts asynchronously

The HTML async attribute, generally associated with script calls to improve performance, is a double-edged sword. On the one hand, it makes calls non-blocking for rendering, which has a positive impact on load time metrics such as FCP, LCP and Speed Index. But on the other hand, it makes their execution chaotic, because although they are downloaded asynchronously, they are executed mechanically in the same breath.

In concrete terms, this means that “async” scripts can block the rendering of a page at any time in order to execute. If you remember, browser rendering and JavaScript execution are the two most costly operations for the browser. Sequencing phases of one and the other is a worst-case scenario in which Long Tasks, TBT and INP are directly impacted.

The solution for ensuring asynchronous behavior without blocking rendering is the defer attribute. Not only is the download asynchronous, the attribute also ensures that scripts only run once the document has been fully parsed. Please note, however, that the attribute can only be used with external calls via an HTML <script> tag. Passing a call from Google Tag Manager to defer thus requires some reworking:

    <script src="https://www.googletagmanager.com/gtm.js?id=XXXXXX" defer fetchpriority="low"></script>
    <script>
      // Initialize the dataLayer; this inline script runs before the deferred gtm.js
      window.dataLayer = window.dataLayer || [];
      window.dataLayer.push({'gtm.start': new Date().getTime(), event: 'gtm.js'});
    </script>

Splitting long functions

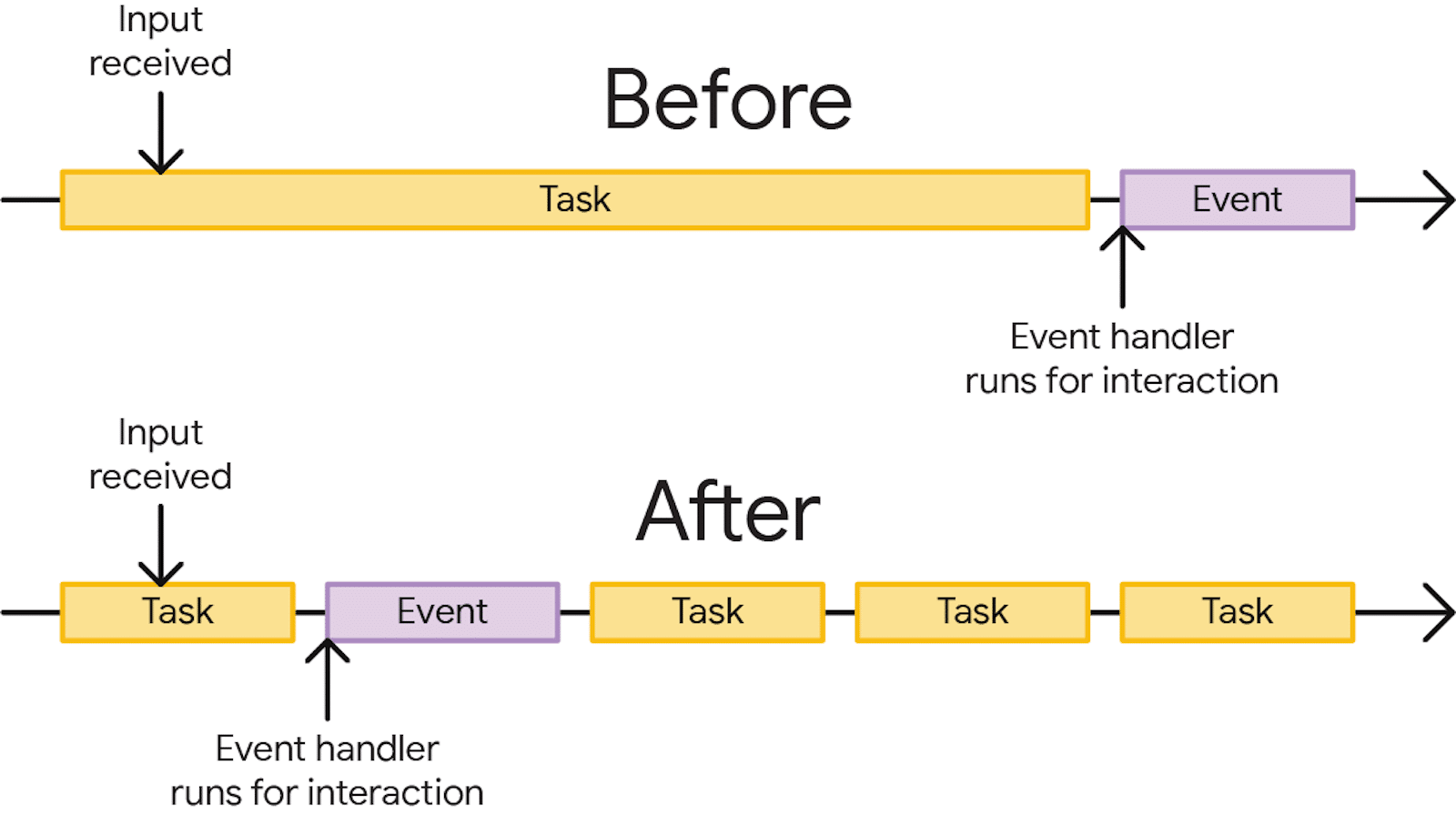

Long Tasks are a major factor in increasing INP. This explains the strong correlation between INP and TBT. However, Long Tasks are generally the result of monolithic JavaScript functions: as the browser is only capable of executing code with a single CPU thread, the latter quickly reaches saturation and slows down the entire page.

The solution is to divide up the heaviest functions into shorter tasks to be executed, ideally under 50 ms. These tasks are then called up in a chain, with the possibility for the browser to handle any user interaction or rendering in between. Four tools are available for this purpose.

setTimeout(), a classic but misused tool

Traditionally designed to delay the execution of a function after a set time, setTimeout() can also be used to execute a task by decoupling it from the parent task. A value of zero is used for this, implying an “immediate” trigger:

    // Queue the call as a separate task instead of running it inline
    setTimeout(() => this._loadDelayedScripts(), 0);

However, this method is not the most efficient in this role. In particular, it doesn’t allow you to maintain prioritization between the various sub-tasks chained together in this way.
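To show how a zero-delay timer can split a monolithic function, here is a minimal sketch that processes a list in chunks and hands control back to the browser between two chunks. The function names, the chunk size and the sample data are hypothetical; the right chunk size depends on how expensive each item is.

```javascript
// Resolve on a later task so the browser can handle input or rendering first.
function yieldWithTimeout() {
  return new Promise((resolve) => setTimeout(resolve, 0));
}

// Apply `handler` to every item, pausing between chunks.
async function processInChunks(items, handler, chunkSize = 100) {
  const results = [];
  for (let i = 0; i < items.length; i += chunkSize) {
    for (const item of items.slice(i, i + chunkSize)) {
      results.push(handler(item));
    }
    await yieldWithTimeout();
  }
  return results;
}

// Usage with hypothetical data: square 250 numbers in chunks of 100.
const numbers = Array.from({ length: 250 }, (_, n) => n);
processInChunks(numbers, (n) => n * n).then((squares) => {
  console.log(squares.length, squares[249]); // 250 62001
});
```

Each chunk becomes its own task, so a click arriving in the middle of the loop only waits for the current chunk rather than for the whole list.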

requestAnimationFrame(), ideal for visual rendering

More modern and better adapted to yielding constraints, requestAnimationFrame() is a JavaScript method designed to create fluid, efficient animations. It is supported by all current browsers, and you can easily fall back on the classic setTimeout() in the rare environments that lack it.

As this method updates the animation before the next screen refresh, it’s an ideal option for yielding rendering functions. The latter will be automatically synchronized with the next browser refresh cycle, regardless of its display frequency. It can be used as follows:

    // Remove the element just before the next repaint
    window.requestAnimationFrame(() => {
      document.querySelector('.share-buttons-toggler').remove();
    });

requestIdleCallback(), for background tasks

This method is used to schedule tasks during periods of browser inactivity, thus de-prioritizing certain operations over others. It is highly effective for executing non-urgent tasks without compromising page performance and responsiveness.

requestIdleCallback() can be used to yield secondary functions without blocking the main thread. It can be used in the following way, complemented by the optimal fallback behavior to a classic setTimeout():

    if ('requestIdleCallback' in window) {
      // Run the loader when the browser is idle
      window.requestIdleCallback(() => this._loadDelayedScripts());
    } else {
      // Fallback for browsers without requestIdleCallback
      setTimeout(() => this._loadDelayedScripts(), 0);
    }

scheduler.yield(), optimal but not yet widely supported

This is a brand-new native yielding API, currently only available in Google Chrome origin trial. The promise couldn’t be more appealing: scheduler.yield() aims to enable JavaScript functions to be executed without blocking rendering or user interaction.

The method relies on the generation of a queue, into which it adds the tasks to be subsequently executed. Its main advantage over setTimeout() is its ability to preserve the notion of execution priority. In particular, operations not executed during a cycle are reintegrated at the head of the execution queue, and not at the end.
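Given the limited support, a cautious way to experiment with it is to wrap the call in a helper that checks for the feature first. The sketch below is an illustration under that assumption: yieldToMain and runTasks are hypothetical names, and the fallback loses the priority benefit described above.

```javascript
// Yield to the main thread, preferring scheduler.yield() when it exists.
function yieldToMain() {
  const nativeScheduler = globalThis.scheduler;
  if (nativeScheduler && typeof nativeScheduler.yield === 'function') {
    return nativeScheduler.yield();
  }
  // Fallback: the continuation goes to the back of the task queue.
  return new Promise((resolve) => setTimeout(resolve, 0));
}

// Run a list of small tasks, yielding after each one.
async function runTasks(tasks) {
  const results = [];
  for (const task of tasks) {
    results.push(task());
    await yieldToMain();
  }
  return results;
}

runTasks([() => 'validate', () => 'render', () => 'track']).then((done) => {
  console.log(done.join(', ')); // validate, render, track
});
```

Because the feature detection lives in a single helper, the rest of the code does not change as browser support evolves.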

There’s no doubt that in a few years’ time, this will be the main solution for relieving an overloaded main thread, and improving INP accordingly.

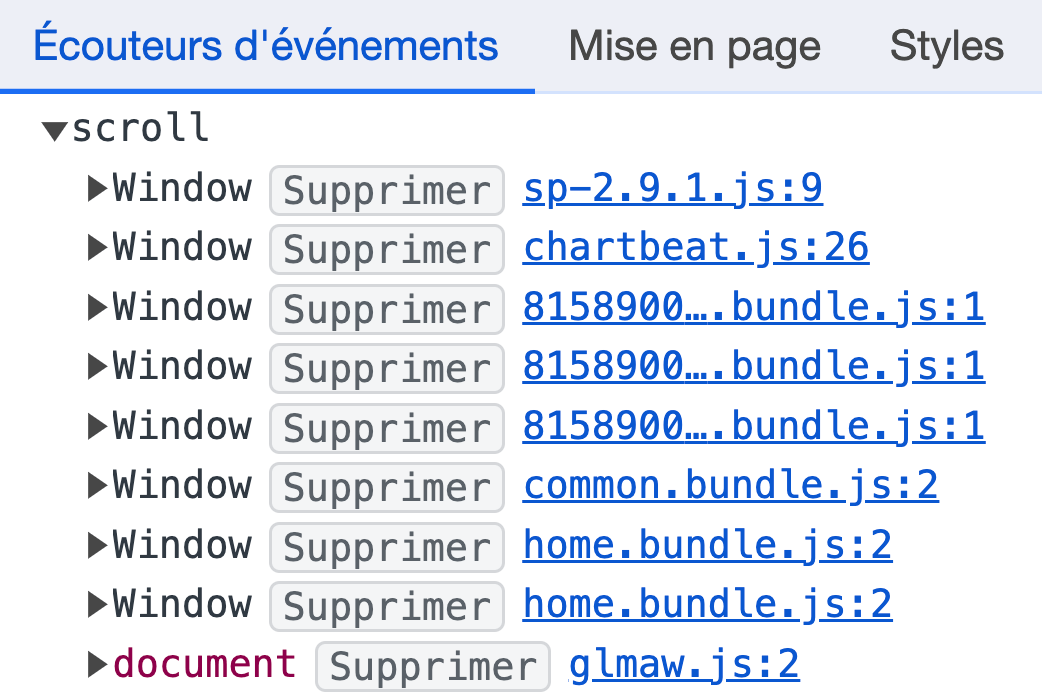

Reduce the number of Event Listeners

INP measures latencies related to user interactions. These can come from native functionalities, such as the activation of a checkbox on a form. But they are often defined in JavaScript via so-called Event Listeners. These are used to trigger a JavaScript “callback” function following a click, touch of a screen or keystroke.

The higher the number of Event Listeners, particularly if they target generic elements such as <a> or <input> tags, the poorer the INP. Each time it interacts with an element associated with an Event Listener, the browser will execute the JavaScript code associated with it. Worse still, the default execution behavior of these scripts is synchronous, which increases the risk of generating Long Tasks.

Fortunately, it is possible to change this behavior to passive execution. To do this, use the “options” argument, in which a passive key with the value true is defined. Please note, however, that this implementation does not allow you to modify the browser’s default behavior via the JavaScript function preventDefault(). Here’s an example of code that adopts this best practice:

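    // Assumption: the original snippet leaves touchEvent undefined; 'touchstart' is used here
    const touchEvent = 'touchstart';

    // Attach a passive listener to every link that opens in a new tab
    document.body.querySelectorAll('a[target="_blank"]').forEach(function(el) {
      el.addEventListener(touchEvent, function(e) {
        alert("You're leaving our site!");
      }, {passive: true});
    });

Providing feedback on interactions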

We explained earlier that certain Ajax-based functionalities can have a major negative impact on the INP. In this specific case, it’s not just script execution time that impacts the metric, but also and above all the need to perform an exchange with the server before displaying anything on the screen. From the user’s point of view, this remains a waiting time in the same way as a classic Long Task.

The solution to this type of slowness is to systematically give feedback to interactions via the UI. This is an elementary UX best practice, but one that is unfortunately often overlooked. Even before making your XHR request or executing your callback function, plan to inject an animated CSS loader or even a silly “Loading in progress…” text.

The fields of application for this recommendation are numerous:

- Add to cart button that checks product availability in the background before validation;

- Positive or negative vote button, which increments a field in the database before displaying the updated number;

- “Load more…” button to manage dynamic pagination.
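Taking the vote button as an example, the recommendation could be sketched as follows. Everything here is hypothetical (the function names, the label texts, the selector and the /vote URL in the usage comment); the point is only the order of operations: update the interface first, talk to the server second.

```javascript
// `button` is any object exposing textContent and disabled (a DOM button in
// practice); `sendVote` is a hypothetical async function calling the server.
async function voteWithFeedback(button, sendVote) {
  const originalLabel = button.textContent;
  // Immediate feedback: set synchronously, so it can be painted while the
  // request is still in flight.
  button.disabled = true;
  button.textContent = 'Loading…';
  try {
    const votes = await sendVote();
    button.textContent = `${votes} votes`;
  } catch (error) {
    button.textContent = originalLabel; // restore the label on failure
  } finally {
    button.disabled = false;
  }
}

// Usage in a page (hypothetical selector and endpoint):
// document.querySelector('.vote-button').addEventListener('click', (event) => {
//   voteWithFeedback(event.currentTarget, () =>
//     fetch('/vote', { method: 'POST' }).then((r) => r.json()).then((d) => d.votes));
// });
```

The next paint then shows the loading state within a frame or two, regardless of how long the server takes to answer.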

Delay execution

It often happens that, even after scrupulously implementing the good practices developed above, a page or a website as a whole continues to suffer from an excessively high INP. If all the JavaScript present is actually used, there’s one last resort: delaying execution. The idea is simple: rather than executing all scripts on initial load, only those strictly necessary for the site to function are executed initially.

Other scripts, especially third-party scripts, are only downloaded and executed at a later stage. By splitting execution into two separate windows, you greatly reduce the risk of saturating available CPU resources. There are a number of possible behaviours here:

- pure delay via a setTimeout(), usually 3 to 5 seconds after the DOMContentLoaded JavaScript event;

- triggering on user action such as click, scroll, keyboard input (…) via the addition of an Event Listener (my preferred solution);

- triggered by a scroll threshold in the page or the entry of a zone within the active viewport, useful for example if a heavy script is only used in the footer.

Rather simple to set up, this functionality is even intuitively available to WordPress CMS users, thanks to extensions such as WP Rocket and Perfmatters.
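For sites that cannot rely on such an extension, the user-action trigger can be combined with a timeout fallback. The sketch below is only one possible approach: the helper names `runOnFirstInteraction` and `loadScript`, the list of events and the example URL are all assumptions.

```javascript
// Hypothetical helper: runs `callback` once, at the first user interaction
// or after `timeoutMs` at the latest, whichever comes first.
function runOnFirstInteraction(target, callback, options = {}) {
  const { events = ["click", "scroll", "keydown", "touchstart"], timeoutMs = 5000 } = options;
  let done = false;
  let timer = null;

  const trigger = () => {
    if (done) return;
    done = true;
    // Clean up so the callback can never run a second time.
    clearTimeout(timer);
    events.forEach((name) => target.removeEventListener(name, trigger));
    callback();
  };

  events.forEach((name) => target.addEventListener(name, trigger, { passive: true }));
  timer = setTimeout(trigger, timeoutMs);
  return trigger;
}

// Injects a <script> tag for a deferred script.
function loadScript(src) {
  const script = document.createElement("script");
  script.src = src;
  script.async = true;
  document.head.appendChild(script);
}

// Browser usage (the URL is a placeholder, not a real third-party script).
if (typeof window !== "undefined" && typeof document !== "undefined") {
  runOnFirstInteraction(window, () => loadScript("https://example.com/third-party.js"));
}
```

The timeout acts as a safety net for visitors who read the page without interacting, so that analytics or similar scripts still load eventually.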

Setting up facades

It sometimes happens that certain very heavy scripts are only used by a minority of visitors. This is typically the case with a chatbot, whose bubble proudly occupies the bottom right-hand corner of the browser, but with which only 0.5% of users interact. Or with video embeds, most of which will never be played. The question here is: should we accept degrading the experience of all our visitors in order to meet the expectations of just 0.5% of them?

This is the question that a “facade” answers. In concrete terms, this means reproducing as faithfully as possible the interface elements to which Internet users are accustomed, such as a floating bubble for a chatbot, or a cover thumbnail with a play button for a video embed. The corresponding script is downloaded and executed only when the user interacts with the trigger element, in complete transparency.

Ideal for the two cases presented, this solution nevertheless requires more development. HTML and CSS must be integrated to match the final result as closely as possible, while on the JavaScript side, an Event Listener must be associated with the triggers to load the script in the background when the time comes. Here’s an example of the script used to load a Typeform form:

    // Keeps the single in-flight (or completed) load, so the script is injected only once
    // and every call gets a promise that actually settles.
    var typeform_promise = null,
        typeform_load = function() {
            if (typeform_promise === null) {
                typeform_promise = new Promise(function(resolve, reject) {
                    const s = document.createElement('script');
                    s.src = "https://embed.typeform.com/next/embed.js";
                    s.async = true;
                    s.onerror = function(err) {
                        typeform_promise = null; // allow a retry on the next hover
                        reject(err);
                    };
                    s.onload = function() {
                        resolve();
                    };
                    const t = document.getElementsByTagName('script')[0];
                    t.parentElement.insertBefore(s, t);
                });
            }
            return typeform_promise;
        };

    // Start loading as soon as the pointer enters one of the trigger elements.
    [].forEach.call(document.body.querySelectorAll(".load-typeform"), function(el) {
        el.addEventListener("mouseenter", typeform_load, {passive: true});
    });

Relying on a Service Worker or Web Worker

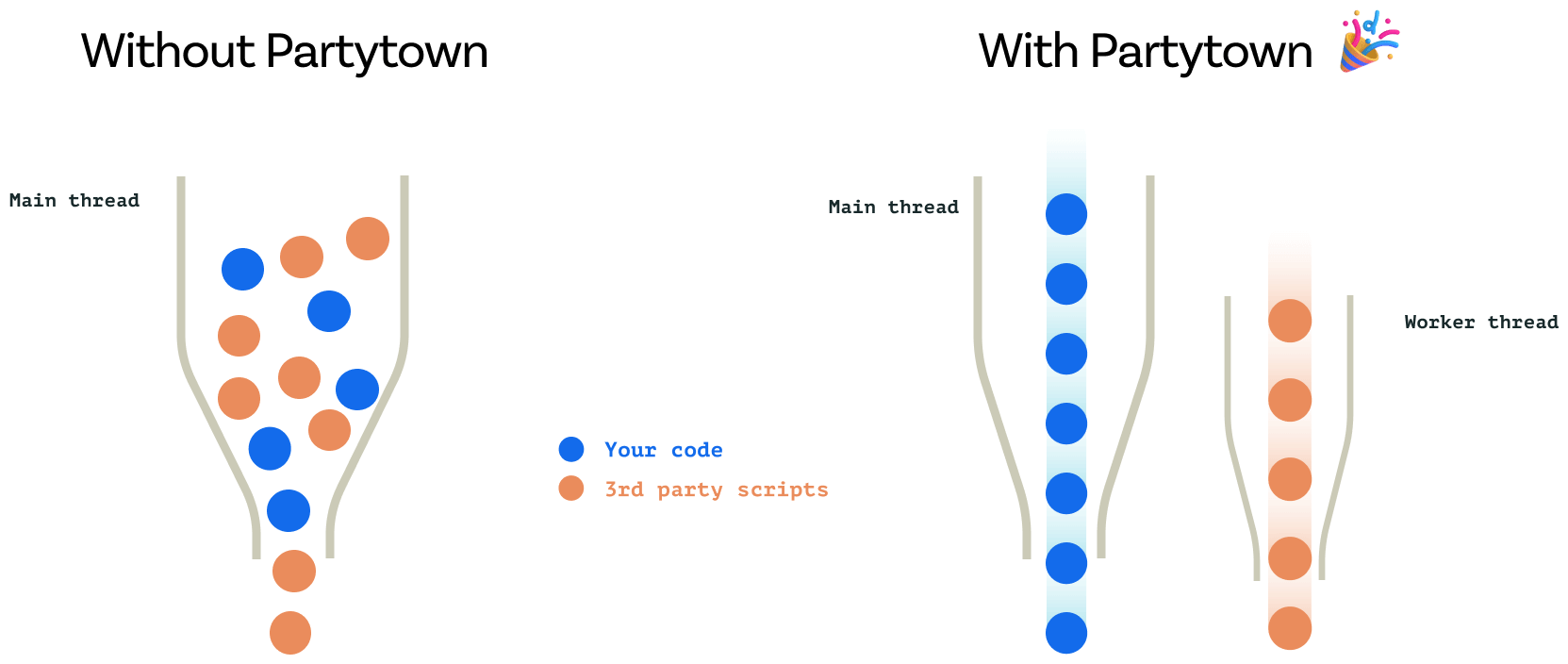

When it comes to heavy-duty tasks that need to be performed via JavaScript, one last option is to opt for a different implementation. Service Workers and Web Workers both enable scripts to be executed asynchronously, on a different thread from that of the page, although their use cases are very different.

One of the most ambitious tools in this field is Partytown. By ingeniously hijacking certain browser mechanisms, it makes it possible to execute third-party scripts in a Web Worker, relying on a Service Worker to relay their calls to the main thread so that they keep access to the native APIs they expect. In particular, it is compatible with Google Tag Manager, Google Analytics, Facebook Pixel and Hubspot, all of which are notorious for their negative impact on Blocking Time and INP.

The result is much greater interactivity of the pages themselves. Beware, however: Service Workers also have certain limitations, the most important of which is an average latency of 300 milliseconds on initial loading.

Avoid using alert() methods

The JavaScript methods alert(), prompt() and confirm() are a simple and effective way of displaying a message or requesting confirmation from a user. However, they execute synchronously, blocking the page’s main thread of execution. In concrete terms, this means that the interaction is considered “in progress” until the user clicks on the close button.

Since this behavior can penalize the INP without frustrating users, it’s highly likely that a future update of Core Web Vitals will change the situation. But for the time being, this remains a crucial point of vigilance when it comes to mastering INP.
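One possible non-blocking alternative to confirm() is the native `<dialog>` element. The sketch below assumes that the dialog contains a `<form method="dialog">` whose buttons carry `value="confirm"` and `value="cancel"`. The helper name `confirmWithDialog` and the selectors are hypothetical.

```javascript
// Hypothetical helper: opens a <dialog> and resolves once the user closes it.
// Resolves to true only if the dialog was closed with the "confirm" button.
function confirmWithDialog(dialog) {
  return new Promise((resolve) => {
    // Reset any value left over from a previous use (e.g. before an Escape key close).
    dialog.returnValue = "";
    dialog.addEventListener(
      "close",
      () => resolve(dialog.returnValue === "confirm"),
      { once: true }
    );
    // showModal() returns immediately: the main thread is not blocked.
    dialog.showModal();
  });
}

// Browser usage (both selectors are assumptions of this sketch).
if (typeof document !== "undefined") {
  const dialog = document.querySelector("#delete-dialog");
  const button = document.querySelector(".delete-item");
  if (dialog && button) {
    button.addEventListener("click", async () => {
      if (await confirmWithDialog(dialog)) {
        // Perform the confirmed action here.
      }
    });
  }
}
```

Because the click handler returns as soon as the dialog is shown, the interaction ends at the next paint instead of lasting until the user answers.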

Optimizing CSS

Limiting the use of the universal selector

Fortunately, JavaScript is not always the only culprit when INP soars. It can also be linked to certain technical choices made by your integrator, front-end developer or the creator of your CMS, theme or extension. One of the most common errors is the overuse of the universal CSS selector, indicated by an asterisk (*).

When the browser’s CSS interpretation engine encounters a rule that is partially or totally based on this selector, it has no choice but to test its relevance for all DOM nodes. This is a costly operation in terms of CPU resources, and therefore potentially generates penalizing latencies on the INP side. So avoid this selector like the plague, and systematically opt for more precise selectors, ideally a class.

Note in passing an exception that is widely implemented on recent sites: the use of this selector to globally activate the border-box CSS rendering mode. In concrete terms, this translates into a CSS declaration of this type:

    /* Assumes border-box is declared on the root element, e.g. html { box-sizing: border-box } */
    *,::after,::before {
        box-sizing: inherit
    }

Using high-performance animations

Talking about interactions without mentioning CSS animations would be a serious breach of web etiquette. All the more so since, on the INP side, they can also play a significant role. This is the case in two distinct but equally penalizing situations:

- continuous animations, such as an animated page background. These can generate high and constant resource consumption, which will directly impact the INP throughout the user’s browsing experience;

- hover animations, such as a zoom, background color change or box-shadow. These, too, can have a negative impact on the INP, but often to a lesser degree due to their one-off activation.

To avoid negatively impacting performance, the first best practice is to animate only the transform and opacity properties. If you come across width, margin or top in a @keyframes declaration, you’ve got something to worry about: these are problematic “non-composited” animations. They force the browser to go through the Layout, Paint and Compositing phases described above.

This is not an arbitrary decision, but the consequence of an architectural choice made by browsers: opacity and transform benefit natively from hardware acceleration, and are therefore processed by the GPU rather than the CPU. In concrete terms, not only will these animations be much smoother visually, they will also not generate Long Tasks.
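To make this rule easier to check, here is a small sketch that audits keyframes written for the Web Animations API before running them. It follows the article's rule of thumb and treats only transform and opacity as safe. The function name `findNonCompositedProperties`, the sample keyframes and the `.card` selector are assumptions.

```javascript
// Properties this sketch considers safe to animate, following the rule above.
const COMPOSITED_PROPERTIES = new Set(["transform", "opacity"]);
// Keys allowed in a Web Animations keyframe that are not animated properties.
const KEYFRAME_META_KEYS = new Set(["offset", "easing", "composite"]);

// Returns the list of animated properties that are neither transform nor opacity.
function findNonCompositedProperties(keyframes) {
  const offenders = new Set();
  for (const frame of keyframes) {
    for (const property of Object.keys(frame)) {
      if (!COMPOSITED_PROPERTIES.has(property) && !KEYFRAME_META_KEYS.has(property)) {
        offenders.add(property);
      }
    }
  }
  return [...offenders];
}

// A zoom expressed with transform, and a similar effect expressed with layout properties.
const zoomKeyframes = [
  { transform: "scale(1)", opacity: 1 },
  { transform: "scale(1.1)", opacity: 0.9 },
];
const growKeyframes = [
  { width: "100px", marginTop: "0px" },
  { width: "120px", marginTop: "10px" },
];

console.log(findNonCompositedProperties(zoomKeyframes)); // []
console.log(findNonCompositedProperties(growKeyframes)); // [ 'width', 'marginTop' ]

// Browser usage: only run the animation if it passes the audit.
if (typeof document !== "undefined") {
  const card = document.querySelector(".card"); // hypothetical selector
  if (card && findNonCompositedProperties(zoomKeyframes).length === 0) {
    card.animate(zoomKeyframes, { duration: 200, fill: "forwards" });
  }
}
```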

Relieve initial rendering

Whatever the number of scripts and CSS animations on a page, an unoptimized DOM, i.e. one that is voluminous and deep, can have a negative impact on the INP. This is because during initial rendering, as well as during user interactions, the rendering engine’s calculations will be more costly, and therefore more time-consuming. Whenever possible, especially when developing a custom site, it is therefore essential to keep the DOM as small as possible.

Such an objective is generally difficult to achieve, however, and the thresholds of 800 and 1,400 nodes, considered the medium and high levels not to be exceeded, are quickly reached. Fortunately, this doesn’t mean that all is lost. There are CSS properties that enable the browser to render more efficiently. One of the most powerful is “Lazy Rendering”.

When the content-visibility CSS property is set to auto, the browser simply skips rendering the relevant portion of the page until it gets close to the viewport. However, there’s a major drawback: since the browser doesn’t perform any rendering calculations for that portion, it needs to be given an estimate of its final dimensions, otherwise scrolling will be jerky. This is simply done via the contain-intrinsic-height CSS property.

Here’s an example of how to set up Lazy Rendering on a footer. Footers are excellent candidates, as they often have more than 100 DOM nodes and are, by definition, visible at a relatively late stage and by a minority of visitors.

    .site-footer {
        content-visibility: auto;
        contain-intrinsic-height: 400px;
    }

    @media (min-width: 768px) {
        .site-footer {
            contain-intrinsic-height: 250px;
        }
    }

Relieve rendering for interactions

While Lazy Rendering is relevant for improving rendering times at initial loading, and therefore mechanically improving INP, it doesn’t help at all during subsequent browsing sessions. For this purpose, there’s a second native feature called “CSS containment”. This limits browser recalculations when an interface element changes: scrolling a slider, opening a summary or simply changing a background color.

Setting up CSS containment allows you to influence the way the browser traverses the DOM tree when a visual change is made, as previously mentioned. In concrete terms, this means defining, via CSS selectors, a sort of watertight barrier that the browser will not cross. Armed with this information, it will no longer try to find out whether a modification within the container can have an impact on its parents.

Since a good example is better than a lengthy explanation, here’s a code snippet that allows you to partition the sections present in the content area of a page. Note that for maximum impact, the CSS contain property should be set to content, but that in many cases, for reasons of compatibility, the less complete and therefore less effective value layout style is used.

    #main-area > section {
        contain: content;
    }

Reduce interaction on “dead” zones

A final CSS tool can be used to further control certain interaction behaviors, and therefore impact the way INP is calculated. This is the pointer-events property, which, when set to none, can make a DOM zone non-interactive. This is a tool to be handled with care, as it can, if misused, penalize the User Experience.

In concrete terms, deploying pointer-events: none; on a div will systematically generate very short interactions (around 16 ms) with, in return, the impossibility of triggering any action in JavaScript or CSS. There are few situations where such an implementation can really add value.

What to do if INP remains problematic after optimization?

If INP remains problematic despite the implementation of the recommendations detailed above, this should lead you to reconsider your technical choices and front-end stack in depth. This means reassessing not only the frameworks and tools used, but also the site’s overall architecture.

To be totally explicit, improving the INP may require a complete site redesign. Such a decision should not be taken lightly, but may be essential to meet modern web performance expectations. It’s crucial to select technologies and tools that not only meet current needs, but are also capable of ensuring the responsiveness demanded by INP.

This is a particularly tough challenge for certain SPA-type JavaScript frameworks, even if most of them have made significant leaps forward in this area in their latest updates.

Historically, Core Web Vitals has focused on metrics that can potentially be optimized in any environment, such as LCP and CLS. This has allowed site publishers to adapt to these new constraints and adopt a continuous improvement approach. The INP, on the other hand, marks a turning point, which could lead to a break with established practices.

It’s a demanding metric, reflecting users’ evolving expectations of increasingly interactive and responsive web experiences. Mastering the INP is therefore not only an indicator of technical performance, but also a sign of adaptation to current and future web trends.

Web performance in the future

I hope this adventure through the maze of the INP has satisfied your curiosity and, why not, sparked your interest in the discipline. What’s important to remember is that at the heart of Google’s approach lies a clear mission: to measure, as accurately as possible, what Internet users really feel when they browse a web page. Think of these metrics as barometers that attempt to capture as closely as possible our comfort and ease as we explore online.

What’s also important to remember is that making our users’ browsing experience more pleasant is not just a fine initiative. It’s also an excellent lever for a site’s business and reputation. Whether you’re selling products online, offering advertising content or anything else, ensuring that visitors navigate with ease and pleasure is a strategic, judicious and always beneficial choice.

For skeptics and those who swear by Core Web Vitals alike, let’s remind ourselves of the real place of web performance: one factor among the hundreds that come into play in Google’s unfathomable ranking algorithm. The important thing to bear in mind is that it helps quality sites stand out from the crowd, a crowd that keeps growing, and improving in quality, with the increasing use of artificial intelligence and tools such as ChatGPT. Webperf acts like a lighthouse, guiding users through the storm to content that’s really worth exploring.

Now that INP has been integrated into Google’s Core Web Vitals, the hardest part for many is yet to come. While some have already had the opportunity to adjust, improve and fine-tune the performance of their web pages by taking advantage of the Experimental period, others are still a long way from reaching the 200 millisecond mark.

Faced with this challenge, you can choose to make the most of the avenues detailed above, or entrust the task to experts like Agence Web Performance. We focus not just on INP, but on all the factors that influence performance and user experience. Our rigorous methodology and cutting-edge tools ensure that your website not only complies, but excels in all aspects of web performance.